Techniques to overcome atmospheric blurring in solar astronomy

Ground-based solar telescopes have given us remarkable insight into much of the Sun’s activity, but atmospheric turbulence, caused by temperature and density variations in the Earth’s atmosphere, distorts and blurs the images before they reach the telescope. A number of solutions have been implemented, with varying degrees of success.

Adaptive optics

Adaptive optics (AO) is a pre-processing technique: it corrects the light in real time, before the image is recorded. A wavefront sensor measures the distortions of the incoming wavefront, and a deformable mirror or a liquid crystal device then compensates for them. We’ve supplied frame grabbers for one such project at the USA’s National Solar Observatory. However, the components required for such a system can be prohibitively expensive for research projects, and residual image degradations still remain in some cases.

Lucky imaging

Alternatively, blurred image data can be post-processed to recover a high-resolution image. Speckle imaging is a family of techniques based on taking many short-exposure pictures that “freeze” the atmosphere. We’ve looked at lucky imaging, one particular form of speckle imaging, in a previous article. Images are taken with a high-speed camera using exposures short enough (100 ms or less) that changes in the Earth’s atmosphere during each exposure are negligible. If thousands of images are taken, a number of frames are likely to be sharp, because by chance the turbulence was weaker during those particular “lucky” exposures. By selecting only the very best frames, for example the sharpest 1%, and combining them into a single image by shifting and adding them, lucky imaging can approach the diffraction-limited resolution of the telescope. Originally, objects imaged at these short exposure times needed to be very bright, but the adoption of sensitive CCD cameras has made the technique far more widely used.
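
The select, shift and add steps described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the sharpness metric (sum of squared neighbour differences), the alignment on the brightest pixel and the wrap-around shift are all simplifying assumptions, and the function names `sharpness`, `brightest_pixel`, `shift` and `lucky_image` are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]  # a 2D image stored as rows of pixel values


def sharpness(frame: Frame) -> float:
    """Sum of squared differences between neighbouring pixels.

    Higher values mean more fine detail, i.e. a sharper frame. This is one
    simple metric among many and is an assumption made for illustration.
    """
    h, w = len(frame), len(frame[0])
    total = 0.0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                total += (frame[y][x + 1] - frame[y][x]) ** 2
            if y + 1 < h:
                total += (frame[y + 1][x] - frame[y][x]) ** 2
    return total


def brightest_pixel(frame: Frame) -> Tuple[int, int]:
    """Return the (row, column) of the brightest pixel."""
    h, w = len(frame), len(frame[0])
    return max(((y, x) for y in range(h) for x in range(w)),
               key=lambda p: frame[p[0]][p[1]])


def shift(frame: Frame, dy: int, dx: int) -> Frame:
    """Move the frame content by (dy, dx) pixels, wrapping around the edges."""
    h, w = len(frame), len(frame[0])
    return [[frame[(y - dy) % h][(x - dx) % w] for x in range(w)]
            for y in range(h)]


def lucky_image(frames: List[Frame], keep_fraction: float = 0.01) -> Frame:
    """Keep the sharpest fraction of frames, align them and average them."""
    n_keep = max(1, round(len(frames) * keep_fraction))
    best = sorted(frames, key=sharpness, reverse=True)[:n_keep]
    h, w = len(best[0]), len(best[0][0])
    cy, cx = h // 2, w // 2
    acc = [[0.0] * w for _ in range(h)]
    for frame in best:
        # Shift each selected frame so that its brightest pixel lands at the centre.
        by, bx = brightest_pixel(frame)
        aligned = shift(frame, cy - by, cx - bx)
        for y in range(h):
            for x in range(w):
                acc[y][x] += aligned[y][x]
    return [[v / n_keep for v in row] for row in acc]
```

In a real instrument the frames would be large arrays handled with a numerical library, alignment would use sub-pixel cross-correlation rather than a single bright pixel, and the image edges would be treated more carefully than with wrap-around.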

Multi-Frame Blind Deconvolution (MFBD)

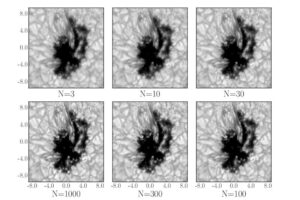

MFBD is a further numerical technique that models and removes residual image degradations, such as those left after adaptive optics, allowing observers to extract more information from their image data. An observed image is the true image convolved with an unknown point spread function, or PSF (the blur), created by the atmosphere and the telescope. Because both the true image and the PSF are unknown, the deconvolution is “blind”: MFBD uses a set of frames of the same object to estimate the PSF of each frame, and the true image, as accurately as possible.
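
The image-formation model above can be written out explicitly. What follows is one common least-squares formulation, assuming additive Gaussian noise and no regularization; actual MFBD codes differ in how they parameterize the PSFs and regularize the solution. For frames $k = 1, \dots, K$, with true object $o$, PSF $p_k$ and noise $n_k$:

$$
i_k(\mathbf{x}) = (o * p_k)(\mathbf{x}) + n_k(\mathbf{x}), \qquad k = 1, \dots, K
$$

$$
\hat{o}, \{\hat{p}_k\} = \arg\min_{o,\,\{p_k\}} \sum_{k=1}^{K} \sum_{\mathbf{x}} \bigl[\, i_k(\mathbf{x}) - (o * p_k)(\mathbf{x}) \,\bigr]^2
$$

In the Fourier domain (capital letters denote Fourier transforms, $\mathbf{u}$ is spatial frequency), convolution becomes multiplication, $I_k = O P_k + N_k$, and for a given set of PSFs the object that minimizes the sum above is

$$
\hat{O}(\mathbf{u}) = \frac{\sum_{k=1}^{K} I_k(\mathbf{u})\, P_k^{*}(\mathbf{u})}{\sum_{k=1}^{K} \lvert P_k(\mathbf{u}) \rvert^{2}}
$$

Every additional frame contributes another equation that shares the same unknown object, which is one way to see why more frames constrain the solution better. Where the denominator approaches zero, practical implementations add a regularization term to avoid amplifying noise.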

With this technique, the more frames acquired, the better the average and the better the resulting image, so a very fast method of capturing frames is required. Michiel van Noort, a scientist at the Max Planck Institute for Solar System Research, has been working with our FireBird Quad CXP-6 CoaXPress and FireBird Camera Link 80-bit (Deca) frame grabbers to obtain accurate, real-time performance and enhanced solar imaging.
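
The “better average” part of this argument can be illustrated with a toy simulation. It assumes perfectly aligned frames whose noise is independent and Gaussian, in which case the error of the average falls roughly as $1/\sqrt{N}$ for $N$ frames. It does not model the PSF estimation that MFBD also performs, and the helper names are hypothetical.

```python
import math
import random
from typing import List


def noisy_frames(truth: List[float], n_frames: int, sigma: float,
                 seed: int = 0) -> List[List[float]]:
    """Simulate aligned exposures of the same scene with independent Gaussian noise."""
    rng = random.Random(seed)
    return [[t + rng.gauss(0.0, sigma) for t in truth] for _ in range(n_frames)]


def average_frames(frames: List[List[float]]) -> List[float]:
    """Pixel-by-pixel mean of all frames."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]


def rms_error(estimate: List[float], truth: List[float]) -> float:
    """Root-mean-square difference between an estimate and the true scene."""
    squared = [(e - t) ** 2 for e, t in zip(estimate, truth)]
    return math.sqrt(sum(squared) / len(squared))


truth = [float(i % 10) for i in range(2000)]  # a toy one-dimensional "image"
frames = noisy_frames(truth, n_frames=100, sigma=5.0)
single = rms_error(frames[0], truth)
averaged = rms_error(average_frames(frames), truth)
print(f"1 frame: {single:.2f}, 100 frames: {averaged:.2f}")
```

With 100 frames the printed error of the average should be roughly ten times smaller than that of a single frame.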

Michiel is now using the FireBird CXP acquisition card to grab frames from the latest generation of large-format image sensors from AMS/CMOSIS (CMV12000, CMV20000 and CMV50000), which are needed for a new type of high-resolution hyperspectral instrument. Such instruments require very large detectors, but also a frame rate high enough to “freeze” the Earth’s atmosphere, which tests our boards to their limits. This is how he explains the process:
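
A back-of-the-envelope calculation shows why large sensors at high frame rates are demanding. The sketch below assumes a hypothetical 20 Mpx sensor read out at 100 fps with 12 bits per pixel, and compares it with four CoaXPress CXP-6 links (6.25 Gbit/s line rate each, with 8b/10b line coding). It ignores packet and protocol overhead, so real usable bandwidth is somewhat lower; the figures are illustrative, not measured.

```python
# Assumed figures, for illustration only.
CXP6_LINE_RATE_GBIT_S = 6.25  # CoaXPress CXP-6 line rate per coax link
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b line coding: 8 data bits per 10 line bits


def sensor_data_rate_gbit_s(pixels: float, fps: float, bits_per_pixel: int) -> float:
    """Raw pixel data rate produced by the sensor, in Gbit/s."""
    return pixels * fps * bits_per_pixel / 1e9


def cxp6_payload_gbit_s(links: int) -> float:
    """Data bandwidth of `links` CXP-6 links after line coding, ignoring packet overhead."""
    return links * CXP6_LINE_RATE_GBIT_S * ENCODING_EFFICIENCY


need = sensor_data_rate_gbit_s(pixels=20e6, fps=100, bits_per_pixel=12)
have = cxp6_payload_gbit_s(links=4)
print(f"sensor: {need:.1f} Gbit/s, quad CXP-6: {have:.1f} Gbit/s")
```

Under these assumptions the sensor would produce about 24 Gbit/s against roughly 20 Gbit/s of link capacity, which is consistent with the remark below that 100 fps is ideal but difficult to attain; lower bit depths or frame rates change the balance.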

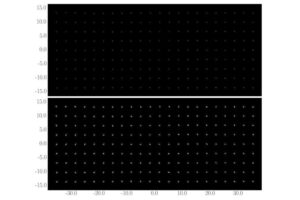

“The hyperspectral instrument I’m using is called an integral field spectrograph. It differs from a traditional long-slit spectrograph in that it tries to recover high-resolution spectral information not only along a one-dimensional slit, but over a two-dimensional field, without any scanning or tuning. To do this, the light in the focal plane is ‘reformatted’ in such a way that space is created between the image elements, into which the spectral information can be dispersed. The Microlensed Hyperspectral Imager (MiHI) does this, as the name suggests, using a double-sided microlens array.

“This works very well, but it creates a major challenge: even for a small field of view (say, 256×256 pixels), if you want to capture 256 spectral elements, you actually need to capture 16M pieces of information. On top of that, you need to critically sample each of these elements with at least 4 pixels, to avoid confusing the information from different pixels. This is why such instruments need very large sensors, and the atmospheric turbulence requires them to be fast as well (100 fps is ideal, but difficult to attain). The MiHI has 128×128 image elements (so-called spaxels, or spatial pixels, each with its own spectrum) and about 324 spectral elements, which needs more than 20 Mpx. I couldn’t really get that earlier in the process, but now it’s no problem.”
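
The pixel counts in the quote follow from simple multiplication: spatial elements × spectral elements × detector pixels per element. The helper below is a hypothetical illustration; its default of 4 pixels per element is taken from the “at least 4 pixels” for critical sampling mentioned in the quote, and “about 324” spectral elements is treated as exactly 324.

```python
def required_pixels(nx: int, ny: int, n_spectral: int,
                    pixels_per_element: int = 4) -> int:
    """Detector pixels needed to record every (x, y, wavelength) sample.

    The default of 4 pixels per element follows the critical-sampling
    requirement quoted above.
    """
    return nx * ny * n_spectral * pixels_per_element


# 256 x 256 spatial elements x 256 spectral elements, before oversampling:
print(required_pixels(256, 256, 256, pixels_per_element=1))  # 16777216, the "16M"
# MiHI: 128 x 128 spaxels x 324 spectral elements x 4 pixels each:
print(required_pixels(128, 128, 324))  # 21233664, just over 20 Mpx
```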

As Fig. 1 shows, image quality improves directly with the number of frames: more frames give a better average, which leads to a better result.

We’re proud that our industry-leading products are contributing to scientists’ knowledge of the full variety of the Sun’s observable and measurable phenomena. We also customize image capture and processing products for more down-to-earth applications, including industrial inspection, medical imaging and surveillance. From space missions to large-scale deployments of industrial vision systems, we’ve provided imaging components and embedded systems that help our customers deliver world-class solutions. Get in touch to discuss your vision challenges.

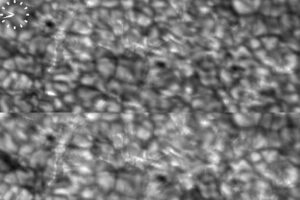

Featured image credit: IAC.